FingerVision - Concept

FingerVision is a vision-based tactile sensor consisting of a transparent soft skin and cameras. The cameras detect deformation of the skin, which provides force perception. Because the skin is transparent, the cameras can also see through it to the outside, which provides useful information about the objects being manipulated, such as in-hand pose, in-hand movement (slip), deformation, shape, and texture.

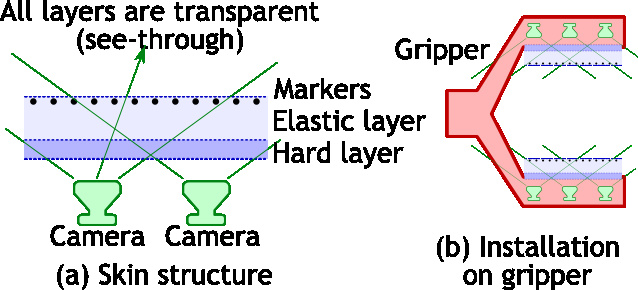

The following figure shows a conceptual diagram of FingerVision:

The skin has a soft layer made of silicone and a hard layer made of acrylic. Colored (black) markers are placed on the surface of the soft layer to make skin deformation easier to detect.
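To illustrate how marker positions could be turned into a force estimate, here is a minimal sketch. It is not the FingerVision implementation: it assumes the marker centers have already been detected in image coordinates, matches each marker in a reference (unloaded) frame to its nearest detection in the current frame, and scales the displacement by a calibration gain. The function names, the `max_dist` threshold, and the linear `gain` are all hypothetical choices made for this example.

```python
import math


def match_markers(reference, current, max_dist=10.0):
    """Pair each reference marker with its nearest current detection.

    reference, current: lists of (x, y) marker centers in pixels.
    Returns a list of (reference_index, (dx, dy)) for every reference
    marker that has a detection within max_dist pixels (an assumed limit).
    """
    displacements = []
    for i, (rx, ry) in enumerate(reference):
        best = None
        best_d = max_dist
        for cx, cy in current:
            d = math.hypot(cx - rx, cy - ry)
            if d <= best_d:
                best, best_d = (cx - rx, cy - ry), d
        if best is not None:
            displacements.append((i, best))
    return displacements


def estimate_forces(displacements, gain=1.0):
    """Scale each displacement by a hypothetical linear calibration gain."""
    return [(i, (gain * dx, gain * dy)) for i, (dx, dy) in displacements]


def mean_force(forces):
    """Average the per-marker force estimates into one 2D vector."""
    if not forces:
        return (0.0, 0.0)
    n = len(forces)
    return (sum(f[0] for _, f in forces) / n,
            sum(f[1] for _, f in forces) / n)


# Example: three markers, two of which shift to the right under load.
reference = [(10.0, 10.0), (30.0, 10.0), (10.0, 30.0)]
current = [(12.0, 10.0), (32.0, 11.0), (10.0, 30.0)]

displacements = match_markers(reference, current)
forces = estimate_forces(displacements, gain=0.5)
print(forces)
print(mean_force(forces))
```

Nearest-neighbor matching keeps the sketch short, but it only works while markers move less than half the marker spacing between frames. A real system would also need camera calibration and a measured relation between displacement and force.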

FingerVision has the following features:

- Multimodality:
  - Force distribution
  - Proximity vision:
    - Slip, deformation
    - Object pose
    - Object texture, shape, etc.
- Low-cost and easy to manufacture
  - The camera is the most expensive component. We are using a USB 2.0 camera with a fisheye lens, which costs $45. The total cost is about $50.
  - The entire structure is simple.
- Physically robust
  - The sensing part (the cameras) is isolated from the skin where external force is applied. Even if the skin is damaged, the sensing part is not affected.

Why do we use cameras?

Cameras are becoming smaller and cheaper: Cameras are a very common sensing device. Most smartphones have cameras, and their front cameras in particular are small. Cameras are also important in medical settings such as endoscopic surgery, where smaller cameras are desirable. One example of a small camera on the market is NanEye, which is a 1 mm cube in size.

This trend is likely to continue: cameras will become smaller and cheaper. We can therefore expect vision-based tactile sensors to become smaller and cheaper as well, so that many robots will have tactile sensing.

Video processing is an active area of research and development: Many companies, such as NVIDIA, Intel, and Google, are developing video processing technologies. This trend is also likely to continue.

Vision gives us a lot of information: By processing images, we can perceive many things. For example, FingerVision provides force distribution, object pose and shape, and object movement (slip, deformation). We can also apply deep learning to obtain more detailed information, such as the object class and segmented object parts.

RGB cameras are just one example: We currently use RGB cameras in FingerVision, but we emphasize that they can be combined with other sensors, such as IR sensors and range finders. Embedding microphones, accelerometers, and strain gauges would also be a good idea.

Refer to our publications for more details.


- Akihiko Yamaguchi: Assistive Utilities with FingerVision for Learning Manipulation of Fragile Objects, in Proceedings of the 19th SICE System Integration Division Annual Conference (SI2018), December 15, 2018.

- Akihiko Yamaguchi: FingerVision for Tactile Behaviors, Manipulation, and Haptic Feedback Teleoperation, in Proceedings of the IEEJ International Workshop on Sensing, Actuation, Motion Control, and Optimization (SAMCON2018), IS2-4, Adachi-ku, Tokyo, Japan, March 6, 2018.

- Akihiko Yamaguchi and Christopher G. Atkeson: Implementing Tactile Behaviors Using FingerVision, in Proceedings of the 17th IEEE-RAS International Conference on Humanoid Robots (Humanoids2017), Birmingham, UK, 2017. (Got Mike Stilman Award!)

- Akihiko Yamaguchi and Christopher G. Atkeson: Grasp Adaptation Control with Finger Vision: Verification with Deformable and Fragile Objects, in Proceedings of the 35th Annual Conference of the Robotics Society of Japan (RSJ2017), 1L3-01, Saitama, September 11-14, 2017.

- Akihiko Yamaguchi and Christopher G. Atkeson: Optical Skin For Robots: Tactile Sensing And Whole-Body Vision, in the RSS17 Workshop on Tactile Sensing for Manipulation, Robotics: Science and Systems, Massachusetts, USA, 2017.

- Akihiko Yamaguchi and Christopher G. Atkeson: Combining Finger Vision and Optical Tactile Sensing: Reducing and Handling Errors While Cutting Vegetables, in Proceedings of the 16th IEEE-RAS International Conference on Humanoid Robots (Humanoids2016), Cancun, Mexico, 2016.