DCOB: Action Space for Motion Learning of Large DoF Robots

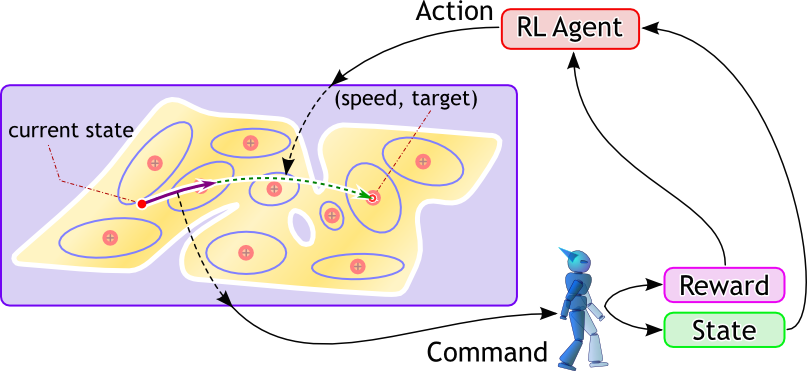

Reinforcement Learning (RL) methods enable a robot to acquire behaviors from its objective alone, expressed as a reward function. However, handling a high-dimensional control input space (e.g. that of a humanoid robot) is still an open problem.

The aim of this research is to develop an action space suited to RL methods, with which a robot can quickly learn high-performance motions.

We proposed a discrete action set named DCOB. DCOB stands for an action Directed to the Center Of a Basis function. It is generated from the basis functions (BFs) given for approximating a value function. Although DCOB is a discrete set, it is able to acquire high-performance motions.
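The idea of generating actions from BFs can be sketched roughly as follows. This is only a minimal illustration, not the SkyAI implementation: the function names, the `interval_factors` and `speed` parameters, and the straight-line reference trajectory are all assumptions made for the example, and the BF centers are treated directly as target joint positions.

```python
import itertools
import numpy as np

def build_dcob_actions(bf_centers, interval_factors=(1.0,)):
    """Pair every BF center with every interval factor (both hypothetical inputs).

    Each discrete action is a tuple (interval_factor, target), where the
    target is the center of one basis function.
    """
    centers = np.asarray(bf_centers, dtype=float)
    return [(g, c.copy()) for g, c in itertools.product(interval_factors, centers)]

def reference_trajectory(q_now, action, speed=1.0, dt=0.1):
    """Straight-line reference from q_now toward the action's target.

    Assumption: the duration scales with the largest joint displacement
    multiplied by the interval factor; the real method may differ.
    """
    g, target = action
    q_now = np.asarray(q_now, dtype=float)
    distance = np.max(np.abs(target - q_now))
    duration = max(g * distance / speed, dt)
    n_steps = int(np.ceil(duration / dt))
    # Interpolation ratios in (0, 1]; the last point is the target itself.
    alphas = np.linspace(0.0, 1.0, n_steps + 1)[1:]
    return q_now + alphas[:, None] * (target - q_now)
```

With three BF centers and two interval factors, `build_dcob_actions` returns six discrete actions, so the size of the action set grows with the number of BFs rather than with the number of joints.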

As an extension, we proposed WF-DCOB. It uses wire-fitting to learn within the continuous action space that DCOB discretizes. WF-DCOB therefore has the potential to acquire higher-performance motions than DCOB. So far, however, the performance of the acquired motions is almost the same, due to the instability of learning with wire-fitting.
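For readers unfamiliar with wire-fitting, the sketch below shows the commonly cited form of the interpolator: a set of control wires, each an (action, value) pair, defines an action value over a continuous action space, and the greedy action is always one of the wire actions. The names `wire_fitting_value`, `greedy_action`, `smoothing`, and `eps` are assumptions for this example, and how WF-DCOB initializes and updates the wires is not shown here.

```python
import numpy as np

def wire_fitting_value(u, wire_actions, wire_values, smoothing=0.01, eps=1e-6):
    """Interpolate an action value at continuous action u from control wires.

    Each wire i contributes its value q_i with weight 1 / d_i, where
    d_i = ||u - u_i||^2 + smoothing * (max_j q_j - q_i) + eps.
    """
    u = np.asarray(u, dtype=float)
    wires = np.asarray(wire_actions, dtype=float)
    q = np.asarray(wire_values, dtype=float)
    dist = np.sum((wires - u) ** 2, axis=1) + smoothing * (q.max() - q) + eps
    weights = 1.0 / dist
    return float(np.sum(weights * q) / np.sum(weights))

def greedy_action(wire_actions, wire_values):
    """Return the wire action with the highest wire value.

    With this interpolator the maximum over u is attained at that wire,
    so no search over the continuous action space is needed.
    """
    best = int(np.argmax(wire_values))
    return np.asarray(wire_actions, dtype=float)[best]
```

Because the value at any action is a weighted average of the wire values, it can never exceed the largest wire value, which is what makes the greedy action cheap to find.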

Application to Motion Learning of Robots

Learning Jumping

This is an application of DCOB to learning a jumping motion of a humanoid robot in simulation. Peng's Q(λ)-learning is used as the RL method.

In the early stage of learning, the robot acts randomly because it learns from scratch (i.e. with no prior knowledge).

After learning, the robot acquires a jumping motion.

Learning Crawling

DCOB is applicable to various motions. This is an example of learning to crawl.

In the early stage of learning, the behavior of the robot is quite similar to that in the jumping case. This is because the robot again learns from scratch.

After learning, the robot acquires a crawling motion.

Crawling by a Real Robot

Only simulation? No! Our method is applicable to a real robot. We applied DCOB to a crawling task on a real spider robot (Bioloid, made by ROBOTIS).

This is the learning phase. The robot again learns from scratch (without simulation!).

After learning, the robot successfully acquires a crawling motion (after around 30 minutes).

Another viewpoint:

Video for AURO2013

Implementation

DCOB is implemented in SkyAI. You can download and test it!

Related Papers

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:
  DCOB: Action space for reinforcement learning of high DoF robots,
  Autonomous Robots, Vol.34, No.4, pp.327-346, May, 2013. [PDF] [Springer] [Video]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:
  Constructing Action Space from Basis Functions for Motion Acquisition of Robots by Reinforcement Learning,
  Journal of the Robotics Society of Japan, Vol.29, No.1, pp.55-66, 2011. (in Japanese) [PDF]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:
  DCOB: Constructing Action Space from Basis Functions for Motion Acquisition of Robots by Reinforcement Learning ---Application to Crawling Task of Actual Small Size Multi-link Robot---,
  in Proceedings of the 2010 JSME Conference on Robotics and Mechatronics (ROBOMEC2010), 2P1-G10, Asahikawa, Japan, June, 2010. (in Japanese) [PDF]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:
  Constructing Continuous Action Space from Basis Functions for Fast and Stable Reinforcement Learning,
  in Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN2009), pp.401-407, Toyama, Japan, 2009. [final-draft]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:
  Constructing Action Set from Basis Functions for Reinforcement Learning of Robot Control,
  in Proceedings of the 2009 IEEE International Conference on Robotics and Automation (ICRA2009), pp.2525-2532, Kobe, Japan, May, 2009. [final-draft]

- Akihiko Yamaguchi, Norikazu Sugimoto, and Mitsuo Kawato:
  Reinforcement Learning with Reusing Mechanism of Avoidance Actions and its Application to Learning Whole-Body Motions of Multi-Link Robot,
  Journal of the Robotics Society of Japan, Vol.27, No.2, pp.209-220, 2009. (in Japanese) [PDF]