Robotics Institute

Carnegie Mellon University (CMU)

E-mail : info [at] akihikoy [dot] net

I received the BE degree from Kyoto University (Kyoto, Japan) in 2006 under Prof. Takashi Matsuyama, and the ME and PhD degrees from Nara Institute of Science and Technology (NAIST; Nara, Japan) in 2008 and 2011, respectively, under Prof. Tsukasa Ogasawara. During the master's course, I was also guided by Prof. Mitsuo Kawato at the Computational Neuroscience Laboratories, Advanced Telecommunications Research Institute International (ATR). From April 2010 to July 2011, I was with NAIST as a Japan Society for the Promotion of Science (JSPS) Research Fellow. From August 2011 to April 2015, I was with NAIST as an Assistant Professor of the Robotics Laboratory in the Graduate School of Information Science.

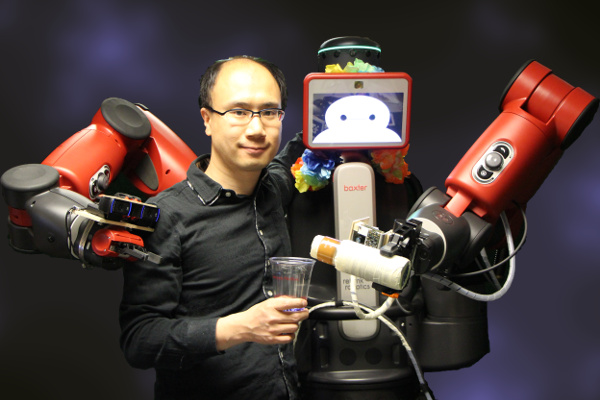

From April 2014 to April 2015, I worked with Prof. Christopher G. Atkeson as a visiting scholar at the Robotics Institute, Carnegie Mellon University (CMU; Pittsburgh, USA). Since April 2015, I have been a Postdoctoral Fellow at the same institute, working with Prof. Atkeson.

My complete biography is HERE.

My complete publication list is HERE.

The latest version of research statement: ResearchStatement.pdf.

The latest version of teaching statement: TeachStatement.pdf.

Research statement

Please read the latest version.

My ultimate research goal is to create a superhuman artificial intelligence: a computer program for robots that is capable of reasoning about behaviors in order to serve humans. I am especially interested in methods related to the learning mechanisms of humans, including reinforcement learning, machine learning, and deep learning. Practicality matters to me. I am always thinking about challenging robotic domains, including deformable object manipulation such as pouring and cutting vegetables, and cooking. My research involves practical case studies with robots such as PR2 and Baxter, building theories based on them, and verifying those theories in practical domains.

Previous and ongoing work

Learning motions from scratch

When I was a PhD student, the popular reinforcement learning methods for robots were direct policy search methods such as Natural Actor-Critic and PoWER. However, they relied on imitation learning to achieve practical robotic tasks such as ball-in-a-cup. I wanted to create a practical method with which robots can acquire motions with less prior knowledge about the domain (i.e., without human demonstrations). My solution was to create many primitive actions as an action space and to learn policies that map the current state to an adequate primitive action. The proposed action space was named DCOB; with it, a 6-legged spider robot could learn a crawling motion in 20 minutes without simulations or demonstrations.

I could design a variety of motions only by changing the reward functions.

Examples are shown in this movie.
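As a rough illustration of the idea of learning a policy that maps each state to one of a small set of primitive actions, the sketch below uses plain tabular Q-learning on a toy chain of states. It is not the DCOB implementation: the chain, the two primitives, the helper names (`step`, `learn_policy`), and all learning parameters are assumptions made only for this example. It also shows how swapping the reward function alone changes the acquired behavior.

```python
import random

N_STATES = 5            # hypothetical toy chain of discretized states
PRIMITIVES = (-1, +1)   # hypothetical primitive actions: step back / step forward


def step(state, primitive):
    """Apply a primitive action; the toy state is clamped to the chain."""
    return max(0, min(N_STATES - 1, state + primitive))


def learn_policy(reward_fn, episodes=500, horizon=20, alpha=0.5, gamma=0.9,
                 epsilon=0.2, seed=0):
    """Tabular Q-learning that maps each state to one primitive action."""
    rng = random.Random(seed)
    q = [[0.0 for _ in PRIMITIVES] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(N_STATES)      # random start, no demonstration
        for _ in range(horizon):
            if rng.random() < epsilon:       # explore
                a = rng.randrange(len(PRIMITIVES))
            else:                            # exploit the current estimate
                a = max(range(len(PRIMITIVES)), key=lambda i: q[state][i])
            nxt = step(state, PRIMITIVES[a])
            target = reward_fn(nxt) + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    # Greedy policy: one primitive action per state
    return [PRIMITIVES[max(range(len(PRIMITIVES)), key=lambda i: q[s][i])]
            for s in range(N_STATES)]


# Only the reward function differs between the two learned behaviors.
forward_policy = learn_policy(lambda s: 1.0 if s == N_STATES - 1 else 0.0)
backward_policy = learn_policy(lambda s: 1.0 if s == 0 else 0.0)
print("reward at the far end  ->", forward_policy)
print("reward at the near end ->", backward_policy)
```

A real robot would have a continuous state and a much richer primitive set; the point here is only that the learner chooses among primitives rather than raw motor commands.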

Even with DCOB, I needed to restrict the total degrees of freedom (DoF) of a robot to at most 7. In order to use the full capability of robots, I created a method with which robots can learn gradually from a simple configuration (lower DoF) to a detailed configuration (higher DoF). This idea is very similar to human learning: humans start by learning along the directions that are most effective for a task. Such an example can be seen in an infant learning model (e.g. Taga et al. 1999). The method was named Learning Strategy Fusion. Later, this framework was extended to learn policies across multiple types of environments.

Details are found HERE (DCOB) and HERE (Learning Strategy Fusion).

Related papers (selected):

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:

DCOB: Action space for reinforcement learning of high DoF robots,

Autonomous Robots, Vol.34, No.4, pp.327-346, May, 2013. [PDF] [Springer] [Video]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:

Learning Strategy Fusion for Acquiring Crawling Behavior in Multiple Environments,

in Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO2013), pp. 605-612, Shenzhen, December, 2013. [final-draft] [IEEE]

- Akihiko Yamaguchi, Jun Takamatsu, and Tsukasa Ogasawara:

Learning Strategy Fusion to Acquire Dynamic Motion,

in Proceedings of the 11th IEEE-RAS International Conference on Humanoid Robots (Humanoids2011), pp.247-254, Bled, Slovenia, 2011. [final-draft]

Case study of pouring

The above methods, however, are not capable of learning more complicated behaviors such as pouring liquids or cutting vegetables. The problem is not only the large state-action space. Unlike the motions shown above, the solutions of these behaviors exist only on a small manifold of the state-action space, and they involve discrete changes of dynamical modes. For example, we can move an object only while we are grasping it. Learning these skills from scratch would take a huge amount of time. Adjusting learning parameters (such as the learning gain) and the initial guess of policy parameters would be extremely hard. Learning from human demonstrations is promising, but the learned policies typically do not generalize.

Thus I started a case study of a general pouring task under the learning-from-demonstration framework. The task is that a robot (PR2) moves material, e.g. liquids, ketchup, and coffee powder, from one container to another. In order to handle many variations of materials and containers, I created various skills, such as pouring by tipping, shaking, and tapping. An important finding is that some fundamental elements, such as planners, optimizers, policy learners, feature points, perception, models, evaluation (cost/reward) functions, and a knowledge database (especially a skill library), need to be unified. The work done here is a unification of simple methods, but the resulting whole learning system provides important guidance for my (and other researchers') future research.

Related papers:

- Akihiko Yamaguchi, Christopher G. Atkeson, and Tsukasa Ogasawara:

Pouring Skills with Planning and Learning Modeled from Human Demonstrations,

International Journal of Humanoid Robotics, Vol.12, No.3, pp.1550030, July, 2015. [final-draft] [World Scientific] [Video]

- Akihiko Yamaguchi, Christopher G. Atkeson, Scott Niekum, Tsukasa Ogasawara:

Learning Pouring Skills from Demonstration and Practice,

in Proceedings of the 14th IEEE-RAS International Conference on Humanoid Robots (Humanoids2014), pp. 908-915, Madrid, 2014. [final-draft]

Model-based reinforcement learning with neural networks on hierarchical dynamic system

Based on the pouring case study, Chris Atkeson and I established a practical reinforcement learning framework. Model-based reinforcement learning (RL) is an important approach for combining existing (analytical) models and learned models. However, modeling error is a big issue when reasoning about policies over a longer time scale. In our framework, we use task-level modeling of dynamical systems, which is an abstracted form of the dynamics. For example, we would ignore the detailed dynamics during grasping and instead model a map from the input state and action parameters to the output state. This idea works even in complicated domains like pouring. Another idea is to use probabilistic representations of models. For this purpose, I extended neural networks to be capable of modeling and propagating probability distributions. I also studied stochastic differential dynamic programming (DDP) for optimizing action parameters over the entire task. This model-based RL is my version of deep reinforcement learning.
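To make the idea of propagating a probability distribution through chained task-level models more concrete, here is a minimal sketch. It assumes a Gaussian state belief and uses first-order linearization (mean through the network, covariance through its Jacobian, plus an additive model-noise term), which is a standard approximation and not necessarily the extension described above. The two-stage chain, the randomly initialized weights standing in for trained models, and every name (`make_stage_model`, `propagate`, and so on) are assumptions made for this example.

```python
import numpy as np


def make_stage_model(seed, n_in, n_out, n_hidden=8):
    """Random fixed weights standing in for a trained stage model (assumption)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(scale=0.5, size=(n_hidden, n_in)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(scale=0.5, size=(n_out, n_hidden)),
        "b2": np.zeros(n_out),
    }


def forward(model, x):
    """One-hidden-layer tanh network: [state, action] -> next state."""
    h = np.tanh(model["W1"] @ x + model["b1"])
    return model["W2"] @ h + model["b2"]


def jacobian(model, x):
    """Analytic Jacobian of forward() with respect to x."""
    h = np.tanh(model["W1"] @ x + model["b1"])
    # d/dx [W2 tanh(W1 x + b1)] = W2 diag(1 - h^2) W1
    return model["W2"] @ ((1.0 - h ** 2)[:, None] * model["W1"])


def propagate(model, mean, cov, action, model_noise):
    """Push a Gaussian state belief through one stage by linearization."""
    x = np.concatenate([mean, action])
    # The action is treated as deterministic, so only the state columns matter.
    j_state = jacobian(model, x)[:, : mean.size]
    new_mean = forward(model, x)
    new_cov = j_state @ cov @ j_state.T + model_noise
    return new_mean, new_cov


STATE_DIM, ACTION_DIM = 2, 1
# Two hypothetical task-level stages, e.g. "grasp" followed by "pour".
stages = [make_stage_model(s, STATE_DIM + ACTION_DIM, STATE_DIM) for s in (0, 1)]
actions = [np.array([0.3]), np.array([-0.2])]
noise = 0.01 * np.eye(STATE_DIM)  # assumed model uncertainty per stage

mean, cov = np.zeros(STATE_DIM), 0.05 * np.eye(STATE_DIM)
for model, action in zip(stages, actions):
    mean, cov = propagate(model, mean, cov, action, noise)
print("predicted mean:", mean)
print("predicted covariance:\n", cov)
```

An optimizer such as DDP could then score the action parameters of every stage against the predicted mean and covariance of the final state, which is where keeping track of uncertainty helps against modeling error.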

Related papers:

- Akihiko Yamaguchi and Christopher G. Atkeson:

Differential Dynamic Programming for Graph-Structured Dynamical Systems: Generalization of Pouring Behavior with Different Skills,

in Proceedings of the 16th IEEE-RAS International Conference on Humanoid Robots (Humanoids2016), Cancun, Mexico, 2016. [final-draft] [Video]

- Akihiko Yamaguchi and Christopher G. Atkeson:

Model-based Reinforcement Learning with Neural Networks on Hierarchical Dynamic System,

in the workshop on Deep Reinforcement Learning: Frontiers and Challenges in the 25th International Joint Conference on Artificial Intelligence (IJCAI2016), New York, 2016. [final-draft] [Slides]

- Akihiko Yamaguchi and Christopher G. Atkeson:

Neural Networks and Differential Dynamic Programming for Reinforcement Learning Problems,

in Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA2016), pp. 5434-5441, Stockholm, Sweden, May, 2016. [final-draft] [Video]

- Akihiko Yamaguchi and Christopher G. Atkeson:

Differential Dynamic Programming with Temporally Decomposed Dynamics,

in Proceedings of the 15th IEEE-RAS International Conference on Humanoid Robots (Humanoids2015), pp. 696-703, Seoul, 2015. [final-draft] [Video]

- Akihiko Yamaguchi and Christopher G. Atkeson:

A Representation for General Pouring Behavior,

in the workshop on SPAR in the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2015), Hamburg, 2015. [pdf]

Teaching statement

Please read the latest version.

Through teaching in classes and research in a laboratory, I would like to give students not only knowledge but also several "meta" skills that are useful in their future jobs, e.g., ways of thinking, solving problems, finding new problems, and creating new things.

Teaching in classes

My basic strategy for teaching scalable and versatile knowledge that students can use to solve their future problems is (1) teaching small pieces of knowledge and their unification theories, and (2) doing as many exercises as possible for the small pieces of knowledge and as large a project as possible for the unification theories. Decomposing complicated knowledge into small pieces and their unification theory is useful both for understanding the complicated knowledge and for leveraging it. Generally speaking, a small piece of knowledge has wider scalability and versatility. Simple but varied exercises are suited to learning small pieces of knowledge (e.g. test code of a learning theory). On the other hand, a large project is good for thinking about how to unify the small pieces. Such a project is the student's own, so there is no predetermined solution to it.

From 2011 to 2014, I was engaged in a project to design a curriculum for a robotics course. Under this project, I designed some class contents (mainly exercises) based on the above strategy, and verified them with master's course students. Course contents and materials are found on the course website:

http://robotics.naist.jp/edu/text/ (Click "English" link in upper right)

Teaching in laboratory

My principal policy for teaching in a laboratory is to treat each student as an independent researcher. In this way, each student feels responsible for his/her research and thus tries to think independently.

In addition to this, I supervise students by taking their types into account. According to a coaching theory, there are four typical types: controller, promoter, analyzer, and supporter. The suitable supervising style depends on the type; for example, a position leading a project suits the controller type, while the supporter type prefers to help other members. Considering each student's type is one key idea.