DQL+: Reusing Memory of Dangerous Actions

When learning a new behavior, humans reuse what they have already learned. Memory of dangerous actions in particular should be reused preferentially. For example, actions that lead to falling down should be memorized and reused when learning new motions.

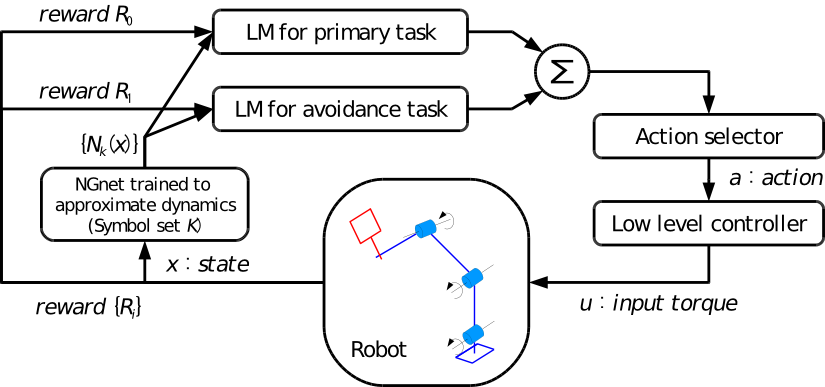

The aim of this research is to develop an architecture that memorizes and reuses dangerous actions. The proposed method is built on the reinforcement learning framework and is named DQL+, which stands for Decomposed Q-Learning+. DQL+ is a form of separative learning of an action value function (AVF), in which several sub-AVFs are learned separately. One of the sub-AVFs memorizes the dangerous actions and is reused in future learning.
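To make the idea of separately learned sub-AVFs concrete, here is a minimal tabular sketch. It is not the DQL+ update rule, which this page does not specify. It assumes the overall action value is the plain sum of the sub-AVFs and that each sub-AVF is trained on its own reward signal with a SARSA-style update. The class `DecomposedQ`, the helper `reuse_sub_avf`, the sub-AVF names `task` and `danger`, and the toy two-action problem are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


class DecomposedQ:
    """Tabular agent whose action value is the sum of separately learned sub-AVFs.

    Illustrative assumption: sum-of-sub-AVFs with per-component SARSA updates.
    This is NOT the DQL+ algorithm itself.
    """

    def __init__(self, actions, names=("task", "danger"), alpha=0.1, gamma=0.9):
        self.actions = list(actions)
        self.alpha = alpha  # learning rate
        self.gamma = gamma  # discount factor
        # One table per sub-AVF, keyed by (state, action), defaulting to 0.0.
        self.sub_q = {name: defaultdict(float) for name in names}

    def total_q(self, state, action):
        # Overall action value = sum over all sub-AVFs.
        return sum(q[(state, action)] for q in self.sub_q.values())

    def greedy_action(self, state):
        return max(self.actions, key=lambda a: self.total_q(state, a))

    def update(self, state, action, rewards, next_state, next_action, done):
        # Each sub-AVF learns only from its own reward component.
        for name, q in self.sub_q.items():
            target = rewards[name]
            if not done:
                target += self.gamma * q[(next_state, next_action)]
            q[(state, action)] += self.alpha * (target - q[(state, action)])


def reuse_sub_avf(source, target, name="danger"):
    """Copy one learned sub-AVF from a previous agent into a new agent."""
    target.sub_q[name] = defaultdict(float, source.sub_q[name])


# Earlier task: the "risky" action ends the episode with a danger penalty.
old = DecomposedQ(actions=["safe", "risky"])
for _ in range(50):
    old.update(0, "risky", {"task": 0.0, "danger": -1.0}, None, None, done=True)
    old.update(0, "safe", {"task": 0.0, "danger": 0.0}, None, None, done=True)

# New task: the task sub-AVF starts empty, the danger sub-AVF is reused.
new = DecomposedQ(actions=["safe", "risky"])
reuse_sub_avf(old, new)
print(new.greedy_action(0))
```

In this toy setting, the new agent avoids the risky action before it has learned anything about the new task, because the reused `danger` sub-AVF already assigns that action a negative value.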

A conventional separative learning method was proposed by Russell et al. [ICML 2003]. The advantage of DQL+ over that method is that DQL+ is better suited to reusing a sub-AVF. The following movie demonstrates this advantage when memory of the dangerous actions (falling down) is reused while learning a tennis serve.

The left panel shows DQL+, and the right panel shows Russell's method. Each method reuses a sub-AVF that memorized the dangerous actions before the tennis serve task was learned. The bar at the bottom left of each panel shows the cumulative damage.

As you can see, the damage bar grows more slowly for DQL+ than for Russell's method. This is because DQL+ reuses the memory of the dangerous actions more effectively than Russell's method does.

Related Papers

- Akihiko Yamaguchi, Norikazu Sugimoto, and Mitsuo Kawato: Reinforcement Learning with Reusing Mechanism of Avoidance Actions and its Application to Learning Whole-Body Motions of Multi-Link Robot, Journal of the Robotics Society of Japan, Vol.27, No.2, pp.209-220, 2009. (in Japanese) [PDF]